AI-powered tools and platforms are becoming increasingly common. As they spread, user trust has emerged as one of the biggest challenges, especially in markets or industries that have historically shown low confidence in automation. Whether it’s a banking app using machine learning to flag suspicious transactions or an HR system using AI to screen candidates, skepticism often stands in the way of adoption.

In regions where technological infrastructure has gaps, or industries where human judgment has long been considered the gold standard, people tend to approach AI systems with caution. Some worry about accuracy. Others fear job loss, surveillance, or lack of control. The concerns are real – and if product teams don’t take them seriously, adoption will stay low, no matter how advanced the technology is.

This is where UX plays a crucial role. Good user experience design doesn’t just make products easier to use; it can actively help shift perceptions and build trust. In low-trust environments, UX must do more than guide – it must reassure, explain, and empower.

Understanding the Roots of Distrust

Before diving into design strategies, it’s important to understand what drives distrust in the first place:

- Lack of Transparency: Users often don’t know how an AI system makes decisions. If the outcomes feel random or unexplainable, trust erodes quickly. A KPMG report found that 54% of people surveyed globally are wary about trusting AI.

- Past Negative Experiences: In many emerging markets, digital systems have failed users in the past – through data leaks, bugs, or misuse of automation.

- Fear of Loss of Control: Users may feel that AI takes decisions out of their hands. This is especially true in industries like healthcare or law, where judgment and context are critical.

- Cultural Attitudes: In some cultures, there’s a strong preference for personal interactions or human oversight, especially in finance, education, and governance.

Designing for these realities means building a product that not only works but also clearly communicates how it works, involves users in decision-making, and earns credibility over time.

Make the AI Visible, But Not Intimidating

AI works best when it doesn’t feel mysterious. A common issue with AI-powered tools is that they do too much behind the scenes. Users are given an output, but no understanding of how it was reached. According to a global study, people who understand how AI works are more likely to trust it, use it confidently, and recognize its benefits.

Designers can address this with “explainable UX.” For example, if an AI recommends a product, flags a document, or adjusts a setting, the interface should offer a simple explanation, such as: “Based on your previous purchases and location.”

You don’t need to overwhelm users with technical details. But a plain-language summary of why a suggestion was made gives the system a human-like clarity.

When users can “see” the AI’s thinking—even at a high level—they’re more likely to trust it.

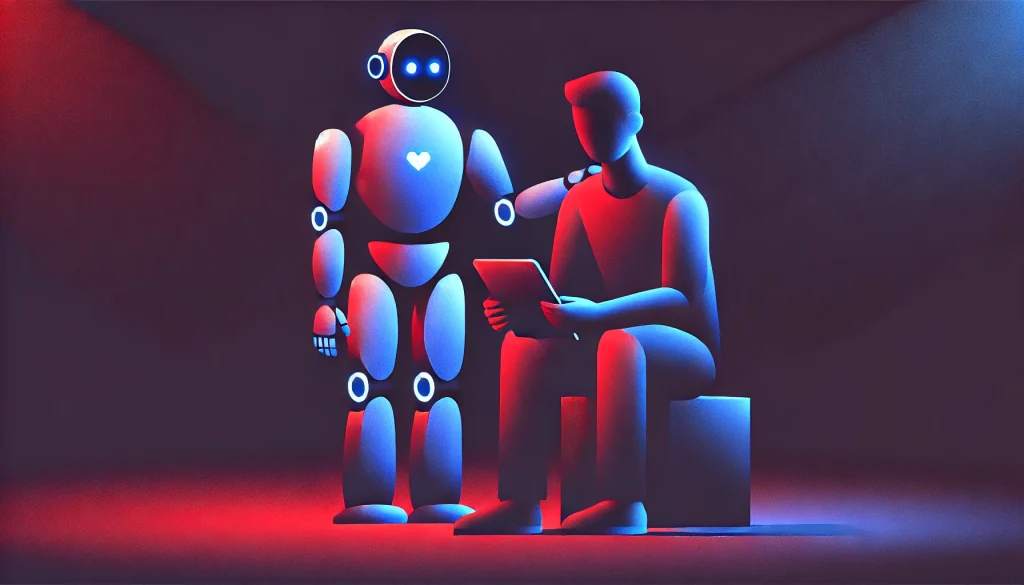
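To make the idea concrete, here is a minimal Python sketch of how an interface might turn a model’s internal reasons into a plain-language sentence like the one above. It assumes the model returns short reason codes alongside each recommendation; the codes, the `PLAIN_LANGUAGE_REASONS` table, and the `explain` function are hypothetical illustrations, not part of any real product or library.

```python
# Hypothetical reason codes a recommender might attach to a suggestion,
# mapped to vetted, user-facing wording (illustrative assumptions only).
PLAIN_LANGUAGE_REASONS = {
    "purchase_history": "your previous purchases",
    "location": "your location",
    "similar_users": "what people with similar interests chose",
}


def explain(reason_codes: list[str], max_reasons: int = 2) -> str:
    """Turn a model's reason codes into one short, plain-language sentence."""
    # Keep only reasons that have approved copy, and cap how many are shown
    # so the explanation stays brief rather than overwhelming.
    phrases = [
        PLAIN_LANGUAGE_REASONS[code]
        for code in reason_codes
        if code in PLAIN_LANGUAGE_REASONS
    ][:max_reasons]
    if not phrases:
        # Honest generic copy is better than showing no explanation at all.
        return "Suggested based on your recent activity."
    if len(phrases) == 1:
        return f"Based on {phrases[0]}."
    return "Based on " + ", ".join(phrases[:-1]) + f" and {phrases[-1]}."


print(explain(["purchase_history", "location"]))
# Based on your previous purchases and your location.
```

Routing explanations through a table of approved phrases, rather than printing raw feature names, keeps the wording in plain language and lets designers review every sentence a user might see.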

Give Users Control, Even If It’s Symbolic

In low-trust markets, people may not want full automation. They often prefer tools that assist them, not replace them. One effective UX strategy is to provide “adjustable autonomy.”

Let users override AI recommendations or customize how much automation they want. In a credit scoring app, for example, show both the automated risk assessment and a manual review option. In a smart agriculture tool, allow farmers to adjust AI-recommended irrigation plans based on their own field observations.

Even when users don’t override the AI, the mere ability to do so makes them feel more in control. This strengthens trust and makes adoption more likely.
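Adjustable autonomy can be sketched as a setting that decides whether an AI recommendation is applied automatically or only proposed, with the user’s own input always taking priority. The Python below uses the irrigation example; `Autonomy`, `IrrigationPlan`, and `resolve_plan` are hypothetical names chosen for illustration, and a real product would likely need more modes and input validation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Autonomy(Enum):
    SUGGEST = "suggest"  # AI proposes a plan; nothing changes until the user confirms
    AUTO = "auto"        # AI applies its plan; the user can still override it


@dataclass
class IrrigationPlan:
    liters_per_day: float
    source: str  # "ai" or "user", so the interface can show who decided


def resolve_plan(
    ai_liters: float,
    autonomy: Autonomy,
    user_confirmed: bool = False,
    user_override: Optional[float] = None,
) -> Optional[IrrigationPlan]:
    """Decide which irrigation plan takes effect (hypothetical helper)."""
    # The farmer's own value always wins, whatever the autonomy level.
    if user_override is not None:
        return IrrigationPlan(user_override, "user")
    # In AUTO mode the AI plan is applied directly.
    if autonomy is Autonomy.AUTO:
        return IrrigationPlan(ai_liters, "ai")
    # In SUGGEST mode the AI plan applies only after explicit confirmation.
    return IrrigationPlan(ai_liters, "ai") if user_confirmed else None
```

For example, `resolve_plan(120.0, Autonomy.SUGGEST)` returns `None` until the farmer confirms, while passing `user_override=90.0` always yields a plan marked as set by the user. Recording the `source` lets the interface label a setting as “Recommended by AI” or “Set by you,” which makes the override visible even when it is rarely used.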

Use Familiar Patterns and Culturally Sensitive Design

For many users, AI is still new. But that doesn’t mean the rest of the interface has to be. Designing around familiar digital patterns—buttons, icons, flows, language—helps reduce friction.

In low-trust regions, unfamiliar design can be just as unsettling as unfamiliar technology. Cultural context matters. For instance, in parts of South Asia or Africa, voice-based interfaces may feel more natural than text-heavy ones. In government apps, an official visual style may lend credibility, while playful animations might do the opposite.

UX teams must test designs with real users from the target market—not just for usability, but for emotional resonance. Small cues of familiarity can have a big impact on perception.

Highlight Human Oversight

One of the best ways to build trust in AI is to show that it’s not operating in isolation. Reinforce that humans are still involved – especially in sensitive tasks like diagnostics, hiring, or security.

This can be done through copy, interface elements, or workflows. For example:

- “Final decisions are reviewed by our team.”

- “Our experts monitor the system 24/7.”

- Include profile photos or names of human reviewers where appropriate.

When users know there’s a real person behind the screen, or at least someone checking in on what the machine is doing, it softens fears about unchecked automation.

Provide Feedback Loops

Design should allow users to question or report outputs they don’t agree with. Feedback loops not only make the AI system better over time—they also tell users that their voice matters.

For instance, after an AI suggests a response or rejects an application, the interface can ask: “Was this helpful?” or “Think this is wrong? Let us know.”

This invites users into the system’s evolution. In a low-trust environment, that sense of agency is key to building confidence.
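One minimal way to wire a “Think this is wrong?” prompt into a workflow is to count disputes per AI output and route repeatedly disputed outputs to a human reviewer, which also supports the human-oversight messaging discussed earlier. The `FeedbackLog` class, its fields, and the threshold rule below are assumptions made for illustration, not a prescribed design.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackLog:
    """Collects answers to "Was this helpful?" prompts (hypothetical class)."""
    review_threshold: int = 1  # disputes needed before a human reviews the output
    disputes: dict[str, int] = field(default_factory=dict)
    comments: dict[str, list[str]] = field(default_factory=dict)
    review_queue: list[str] = field(default_factory=list)

    def record(self, output_id: str, helpful: bool, comment: str = "") -> None:
        """Store one user response about a single AI output."""
        if helpful:
            return  # positive feedback needs no escalation
        self.disputes[output_id] = self.disputes.get(output_id, 0) + 1
        if comment:
            # Keep the user's own words so reviewers see why it was disputed.
            self.comments.setdefault(output_id, []).append(comment)
        # Queue the output for human review once, when the threshold is first reached.
        if self.disputes[output_id] == self.review_threshold:
            self.review_queue.append(output_id)
```

Where to set the threshold is a product decision: a low value gets disputed decisions in front of a person quickly, while a higher one limits reviewer workload.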

Proactively Address Bias and Errors

AI bias is not just a technical problem—it’s a UX challenge. If users in a certain demographic feel the system consistently treats them unfairly, they will lose trust fast.

Design teams must preempt this by acknowledging limitations. Add tooltips or FAQs explaining that the system is continuously learning, and that errors can be corrected. Use clear, honest copy that doesn’t overpromise accuracy.

Transparency about limitations is more credible than pretending the system is perfect.

Build Slowly and Educate Along the Way

Introducing AI in low-trust markets isn’t about flashy launches. It’s about careful, gradual rollout with user education built into every touchpoint.

Use onboarding flows, tooltips, and support content to explain what the AI does and doesn’t do. Avoid jargon. Use everyday language. Focus on outcomes, not algorithms.

Rather than saying, “We use deep learning models,” say: “This tool learns from what you do, so it can make smarter suggestions next time.”

Education isn’t a one-time feature. It’s a continuous UX investment that pays off in trust and adoption.

Final Thoughts

AI has the potential to improve lives, simplify decisions, and unlock new efficiencies. But in low-trust markets, its success depends not just on how well it works but on how thoughtfully it’s introduced. Good UX design bridges the gap between skepticism and confidence. It doesn’t hide the AI; it humanizes it, explains it, and invites users to grow with it.

Designing for trust isn’t just a feature. It’s a foundation. And in regions or industries where doubt runs high, that foundation must be laid brick by brick—with honesty, clarity, and respect for the user’s agency.
